Cybersecurity Snapshot: Expert Advice for Boosting AI Security

With businesses going gaga for artificial intelligence, securing AI systems has become a key priority and a top challenge for cybersecurity teams, as they scramble to master this emerging and evolving field. In this special edition of the Cybersecurity Snapshot, we highlight some of the best practices and insights on AI security that experts have shared so far in 2025.

In case you missed it, here’s fresh guidance for defending AI systems against cyber attacks.

1 - Best practices for locking down AI data

If your organization is looking for recommendations on how to protect the sensitive data powering your AI systems, check out new best practices released in May by cyber agencies from Australia, New Zealand, the U.K. and the U.S.

“This guidance is intended primarily for organizations using AI systems in their operations, with a focus on protecting sensitive, proprietary or mission-critical data,” reads the document titled “AI Data Security: Best Practices for Securing Data Used to Train & Operate AI Systems.”

“The principles outlined in this information sheet provide a robust foundation for securing AI data and ensuring the reliability and accuracy of AI-driven outcomes,” it adds.

By drafting this guidance, the authoring agencies seek to accomplish three goals:

- Sounding the alarm about data security risks involved in developing, testing and deploying AI systems

- Arming cyber defenders with best practices for securing data throughout the AI lifecycle

- Championing the adoption of robust data-security techniques and smart risk-mitigation strategies

Here’s a sneak peek at some of the key best practices found in the comprehensive 22-page document:

- Source smart: Use trusted, reliable data sources for training your AI models, and trace data origins with provenance-tracking.

- Guard integrity: Employ checksums and cryptographic hashes to protect your AI data during storage and transmission.

- Block tampering: Implement digital signatures to prevent unauthorized meddling with your AI data.
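The guidance doesn't prescribe specific tooling. As a minimal sketch of the "guard integrity" practice, assuming your training data sits in a local directory, the Python below records a SHA-256 manifest and later reports which files were modified, removed or added. The function names are illustrative, not taken from the document. A hash manifest only detects changes: someone who can rewrite both the data and the manifest can hide tampering, which is why the guidance pairs hashes with digital signatures.

```python
import hashlib
import json
from pathlib import Path


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to data_dir) to its SHA-256 hex digest.

    Illustrative helper, not an API from the guidance.
    """
    manifest = {}
    for path in sorted(data_dir.rglob("*")):
        if path.is_file():
            rel = path.relative_to(data_dir).as_posix()
            # read_bytes() keeps the sketch short; hash very large files in chunks.
            manifest[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return manifest


def diff_manifest(expected: dict[str, str], actual: dict[str, str]) -> dict[str, list[str]]:
    """Report files modified, missing or unexpectedly added since the manifest was recorded."""
    return {
        "modified": sorted(k for k in expected.keys() & actual.keys() if expected[k] != actual[k]),
        "missing": sorted(expected.keys() - actual.keys()),
        "unexpected": sorted(actual.keys() - expected.keys()),
    }


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "train.jsonl").write_text('{"text": "hello"}\n')
        (root / "labels.csv").write_text("id,label\n1,cat\n")
        baseline = build_manifest(root)
        # In practice, store the manifest where the data pipeline cannot write to it.
        print(json.dumps(baseline, indent=2))

        (root / "labels.csv").write_text("id,label\n1,dog\n")  # simulated tampering
        print(diff_manifest(baseline, build_manifest(root)))
```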

For more information about AI data security, check out these Tenable resources:

- “Harden Your Cloud Security Posture by Protecting Your Cloud Data and AI Resources” (blog)

- “Tenable Cloud AI Risk Report 2025” (report)

- “Who's Afraid of AI Risk in Cloud Environments?” (blog)

- “Tenable Cloud AI Risk Report 2025: Helping You Build More Secure AI Models in the Cloud” (on-demand webinar)

- “Know Your Exposure: Is Your Cloud Data Secure in the Age of AI?” (on-demand webinar)

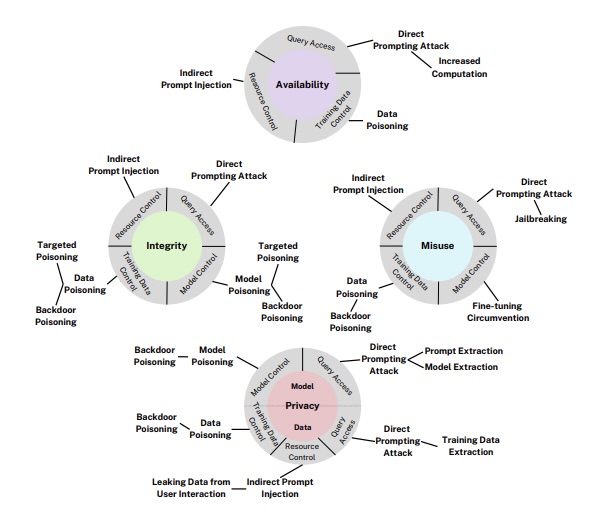

2 - NIST categorizes attacks against AI systems, offers mitigations

The National Institute of Standards and Technology (NIST) is also stepping up to help organizations get a handle on the cyber risks threatening AI systems. In March, NIST updated its “Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations (NIST AI 100-2)” report, first published last year.

The massive 127-page publication includes:

- A detailed taxonomy of adversarial machine-learning (AML) attacks, such as evasion, poisoning and privacy attacks, against both predictive and generative AI systems, as well as of AML attacks that target learning methods

- Potential mitigations against AML attacks, along with a realistic look at their limitations

- Standardized AML terminology, along with an index and a glossary

“Despite the significant progress of AI and machine learning in different application domains, these technologies remain vulnerable to attacks,” reads a NIST statement. “The consequences of attacks become more dire when systems depend on high-stakes domains and are subjected to adversarial attacks.”

For example, to counter supply chain attacks against generative AI systems, NIST recommendations include:

- Verifying data integrity: Before using data from the web to train AI models, make sure it hasn't been tampered with. A basic integrity check involves the data provider publishing cryptographic hashes and the downloader verifying the training data.

- Filtering out poison: Implement data filtering to remove any poisoned data samples.

- Scanning for weaknesses: Conduct vulnerability scans of model artifacts.

- Spotting backdoors: Use mechanistic interpretability methods to identify sneaky backdoor features.

- Designing for resilience: Build generative AI applications in a way that reduces the impact of model attacks.
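The basic integrity check NIST describes comes down to recomputing a hash and comparing it with the one the data provider published. Here is a minimal Python sketch, assuming the provider publishes a hex-encoded SHA-256 digest; the function names are hypothetical. Hashing in fixed-size chunks keeps memory use flat even for multi-gigabyte training shards.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Hash a (possibly very large) file in fixed-size chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path: Path, published_sha256: str) -> None:
    """Raise ValueError unless the file matches the digest the provider published.

    Hypothetical helper; the published digest is normalized to lowercase hex
    without surrounding whitespace before comparison.
    """
    actual = sha256_of(path)
    if actual != published_sha256.strip().lower():
        raise ValueError(f"{path}: SHA-256 mismatch (got {actual})")
```

Run `verify_download` before a file enters the training pipeline and treat a mismatch as a hard failure rather than a warning. The check is only as trustworthy as the channel the published digest arrives through, so fetch the digest separately from the data itself where possible.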

Taxonomy of Attacks on GenAI Systems

(Source: “Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations” report from NIST, March 2025)

The report is primarily aimed at those in charge of designing, developing, deploying, evaluating and governing AI systems.

For more information about protecting AI systems against cyber attacks:

- “Understanding the risks - and benefits - of using AI tools” (U.K. NCSC)

- “Hacking AI? Here are 4 common attacks on AI” (ZDNet)

- “Best Practices for Deploying Secure and Resilient AI Systems” (Australian Cyber Security Centre)

- “Adversarial attacks on AI models are rising: what should you do now?” (VentureBeat)

- “OWASP AI Security and Privacy Guide” (OWASP)

- “How to manage generative AI security risks in the enterprise” (TechTarget)

3 - ETSI publishes global standard for AI security

Seeking to bring clarity to the proper way to secure AI models and systems, the European Telecommunications Standards Institute (ETSI) in April published a global standard for AI security designed to cover the full lifecycle of an AI system.

Aimed at developers, vendors, operators, integrators, buyers and other AI stakeholders, ETSI’s “Securing Artificial Intelligence (SAI); Baseline Cyber Security Requirements for AI Models and Systems” technical specification outlines a set of foundational security principles for an AI system’s entire lifecycle.

Developed hand-in-hand with the U.K. National Cyber Security Centre (NCSC) and the U.K. Department for Science, Innovation & Technology (DSIT), this document breaks down AI system security into five key stages and 13 core security principles:

- Secure design stage

  - Raise awareness about AI security threats and risks.

  - Design the AI system not only for security but also for functionality and performance.

  - Evaluate the threats and manage the risks to the AI system.

  - Make it possible for humans to oversee AI systems.

- Secure development stage

  - Identify, track and protect the assets.

  - Secure the infrastructure.

  - Secure the supply chain.

  - Document data, models and prompts.

  - Conduct appropriate testing and evaluation.

- Secure deployment stage

  - Communication and processes associated with end-users and affected entities.

- Secure maintenance stage

  - Maintain regular security updates, patches and mitigations.

  - Monitor system behavior.

- Secure end-of-life stage

  - Ensure proper data and model disposal.

Each one of the 13 security principles is further expanded with multiple provisions that detail more granular requirements.

For example, in the secure maintenance stage, ETSI calls for developers to test and evaluate major AI system updates as they would a new version of an AI model. Also in this stage, system operators need to analyze system and user logs to detect security issues such as anomalies and breaches.
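ETSI doesn't specify how to analyze those logs. The Python below is one deliberately simple sketch, assuming log entries have already been parsed into dictionaries with hypothetical `user` and `status` fields. It flags users whose request volume far exceeds the median across users, or who accumulate many denied requests. The thresholds are placeholders to tune, and rules like these complement rather than replace a proper monitoring pipeline.

```python
from collections import Counter
from collections.abc import Iterable
from statistics import median


def flag_anomalous_users(
    events: Iterable[dict],
    volume_factor: float = 5.0,  # placeholder: how far above the median counts as unusual
    min_requests: int = 20,      # the volume check ignores users below this count
    max_denied: int = 10,        # placeholder: tolerated denied requests per window
) -> dict[str, list[str]]:
    """Return {user: [reasons]} for users whose activity in one log window looks unusual.

    Each event is assumed to look like {"user": "alice", "status": "ok"} or
    {"user": "alice", "status": "denied"} (a hypothetical schema).
    """
    events = list(events)
    volume = Counter(e["user"] for e in events)
    denied = Counter(e["user"] for e in events if e["status"] == "denied")
    typical = median(list(volume.values())) if volume else 0
    findings: dict[str, list[str]] = {}
    for user, count in volume.items():
        reasons = []
        if count >= min_requests and count > volume_factor * typical:
            reasons.append(f"{count} requests vs. median {typical}")
        if denied[user] > max_denied:
            reasons.append(f"{denied[user]} denied requests")
        if reasons:
            findings[user] = reasons
    return findings
```

The median is used instead of the mean so that a single very noisy user doesn't raise the baseline it is being compared against.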

And if you’re hungry for more technical nitty-gritty, the 73-page companion report “Securing Artificial Intelligence (SAI): Guide to Cyber Security for AI Models and Systems” offers a treasure trove of detail for each provision.

Together, the technical specification and the technical report “provide stakeholders in the AI supply chain with a robust set of baseline security requirements that help protect AI systems from evolving cyber threats,” reads an NCSC blog.

For more information about AI security best practices, check out these Tenable blogs:

- “Securing the AI Attack Surface: Separating the Unknown from the Well Understood”

- “Do You Think You Have No AI Exposures? Think Again”

- “How to Discover, Analyze and Respond to Threats Faster with Generative AI”

- “Never Trust User Inputs -- And AI Isn't an Exception: A Security-First Approach”

- “AI Security Roundup: Best Practices, Research and Insights”

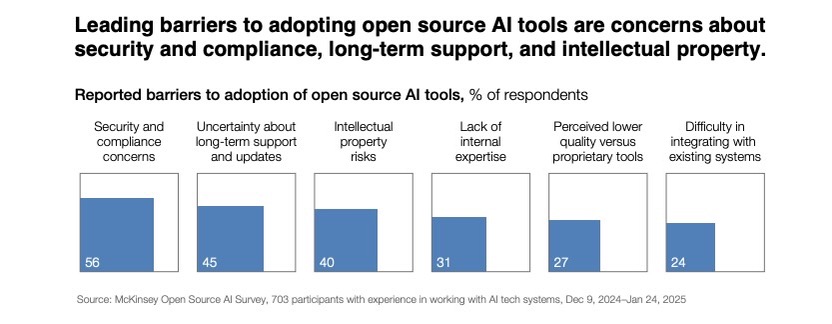

4 - Study: Open source AI triggers cyber risk concerns

As organizations increasingly adopt open-source AI technologies, they also worry about facing higher risks than those posed by proprietary AI products.

That’s according to the report “Open source technology in the age of AI” from McKinsey & Company, the Patrick J. McGovern Foundation and Mozilla, based on a global survey of 700-plus technology leaders and senior developers.

Specifically, while respondents cite benefits like lower costs and ease of use, they consider open-source AI tools to be riskier in areas like cybersecurity, compliance and intellectual property.

If your organization is looking at or already adopting open-source AI products, here are risk mitigation recommendations from the report, published in April:

- Deploy strong guardrails: Think automated content filtering, input/output validation, and that all-important human oversight.

- Assess consistently: Use standardized benchmarks to conduct regular risk assessments.

- Run in trusted zones: Keep your AI models safe by running them in trusted execution environments.

- Fortify repositories: Protect your model repositories with strong access controls.

- Segment your networks: Create separate networks for training and inference servers.

- Verify trustworthiness: Always confirm models come from trusted repositories using cryptographic hash verification.
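Input/output validation is the most code-shaped of these recommendations. As a minimal sketch, assuming an application that asks a model to reply with a small JSON object, the Python below rejects any reply that doesn't match the expected shape before downstream code acts on it. The allowlist, field names and schema are hypothetical; the point is to fail closed on malformed or manipulated output.

```python
import json

ALLOWED_ACTIONS = {"summarize", "classify", "escalate"}  # hypothetical allowlist


def validate_model_output(raw: str) -> dict:
    """Parse and validate a model reply before any downstream code acts on it.

    Expects a JSON object like {"action": "classify", "ticket_id": 123}
    (a hypothetical schema). Raises ValueError on anything else.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or set(data) != {"action", "ticket_id"}:
        raise ValueError("output must be an object with exactly 'action' and 'ticket_id'")
    # Check the type first: an unhashable value (e.g., a list) can't be looked up in a set.
    if not isinstance(data["action"], str) or data["action"] not in ALLOWED_ACTIONS:
        raise ValueError(f"action {data['action']!r} is not allowed")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(data["ticket_id"], int) or isinstance(data["ticket_id"], bool):
        raise ValueError("ticket_id must be an integer")
    return data
```

Validating against an explicit allowlist, rather than trying to spot bad output, means anything the application didn't anticipate is rejected by default.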

For more information about open-source AI’s cybersecurity:

- “Predictions for Open Source Security in 2025: AI, State Actors, and Supply Chains” (OpenSSF)

- “Frequently Asked Questions About DeepSeek Large Language Model (LLM)” (Tenable)

- “Mapping the Open-Source AI Debate: Cybersecurity Implications and Policy Priorities” (R Street)

- “With Open Source Artificial Intelligence, Don’t Forget the Lessons of Open Source Software” (CISA)

- “Open Source AI Models: Perfect Storm for Malicious Code, Vulnerabilities” (DarkReading)

5 - Tenable: Orgs using AI in the cloud face thorny cyber risks

Using AI tools in cloud environments? Make sure your organization is aware of and prepared for the complex cybersecurity risks that emerge when you mix AI and the cloud.

That’s a key message from the “Tenable Cloud AI Risk Report 2025,” released in March and based on a telemetry analysis of public cloud and enterprise workloads scanned through Tenable products.

“Cloud security measures must evolve to meet the new challenges of AI and find the delicate balance between protecting against complex attacks on AI data and enabling organizations to achieve responsible AI innovation,” Liat Hayun, Tenable’s VP of Research and Product Management for Cloud Security, said in a statement.

Key findings from the report include:

- 70% of cloud workloads with AI software installed have at least one critical vulnerability, compared with 50% of cloud workloads that don’t have AI software installed.

- 77% of organizations have the overprivileged default Compute Engine service account configured in Google Vertex AI Notebooks – which puts all services built on this default Compute Engine at risk.

- 91% of organizations using Amazon SageMaker have the risky default of root access enabled in at least one notebook instance, which, if the notebook is compromised, could grant attackers unauthorized access.

These are some of the report's risk mitigation recommendations:

- Take a contextual approach for revealing exposures across your cloud infrastructure, identities, data, workloads and AI tools.

- Classify all AI components linked to business-critical assets as sensitive, and include AI tools and data in your asset inventory, scanning them continuously.

- Keep current on emerging AI regulations and guidelines, and stay compliant by mapping key cloud-based AI data stores and implementing required access controls.

- Apply cloud providers' recommendations for their AI services, but be aware that default settings are commonly insecure and guidance is still evolving.

- Prevent unauthorized or overprivileged access to cloud-based AI models and data stores.

- Prioritize vulnerability remediation by understanding which CVEs pose the greatest risk to your organization.
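The report's findings about insecure defaults lend themselves to automated checks. The Python below is a sketch, not a Tenable tool: it assumes you have already fetched SageMaker notebook descriptions shaped like the DescribeNotebookInstance response (for example, via boto3's `describe_notebook_instance`), whose `RootAccess` field is either "Enabled" or "Disabled". It also flags service account emails matching the default Compute Engine pattern, `PROJECT_NUMBER-compute@developer.gserviceaccount.com`. How you retrieve the service account attached to each Vertex AI notebook varies by API version, so that step is left out, and the function names are hypothetical.

```python
DEFAULT_COMPUTE_SA_DOMAIN = "developer.gserviceaccount.com"


def risky_sagemaker_notebooks(descriptions: list[dict]) -> list[str]:
    """Return the names of SageMaker notebook instances that allow root access.

    Each item is assumed to be a DescribeNotebookInstance-style dict. A missing
    RootAccess value is treated as "Enabled", the insecure default.
    """
    return sorted(
        d["NotebookInstanceName"]
        for d in descriptions
        if d.get("RootAccess", "Enabled") == "Enabled"
    )


def uses_default_compute_sa(service_account_email: str) -> bool:
    """True if the email matches PROJECT_NUMBER-compute@developer.gserviceaccount.com."""
    local, _, domain = service_account_email.partition("@")
    suffix = "-compute"
    return (
        domain == DEFAULT_COMPUTE_SA_DOMAIN
        and local.endswith(suffix)
        and local[: -len(suffix)].isdigit()
    )
```

Keeping the checks as pure functions over already-fetched data makes them easy to test and to run against exports from any inventory tool, not just live API calls.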

To get more information, check out:

- The full “Tenable Cloud AI Risk Report 2025”

- The on-demand webinar “2025 Cloud AI Risk Report: Helping You Build More Secure AI Models in the Cloud”

- The blog “Who's Afraid of AI Risk in Cloud Environments?”

6 - SANS: Six critical controls for securing AI systems

The SANS Institute has also jumped into action to help cyber defenders develop AI security skills and strategies, publishing draft guidelines for AI system security in March.

The “SANS Draft Critical AI Security Guidelines v1.1” document outlines these six key security control categories for mitigating AI systems' cyber risks:

- Access-control methods, including:

  - Least privilege, for ensuring that users, APIs and systems have the minimum-necessary access to AI systems

  - Zero trust, for vetting all interactions with AI models

  - API monitoring, for flagging potentially malicious API usage

- Protections for AI operational and training data, including:

  - Data integrity of AI models

  - Prevention of tampering with AI prompts

- Secure deployment decisions, including:

  - On-premises versus cloud, based on criteria like performance expectations and regulatory requirements

  - Development environments integrated with large language models (LLMs) that don't expose secrets, such as API keys and algorithms

- Inference security for preventing malicious input attacks, including:

  - Adoption of response policies for AI outputs

  - Prompt filtering and validations for mitigating prompt injection attacks

- Continuous monitoring of AI models, including:

  - Refusal of inappropriate queries

  - Detection of unauthorized model changes

  - Logging of prompts and outputs

- Governance, risk and compliance for complying with data protection and privacy regulations, including:

  - Adoption of AI risk management frameworks

  - Maintaining an AI bill of materials to track AI supply chain dependencies

  - Use of model registries to track AI model lifecycles
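Two of these controls, prompt filtering and logging of prompts and outputs, can be sketched in a few lines of Python. The wrapper below assumes the model is any callable that takes a prompt string and returns text; the patterns, size limit, refusal message and logger name are hypothetical. Pattern matching catches only the crudest prompt injection attempts, so treat it as one layer among several, not a defense on its own.

```python
import json
import logging
import re
import time
from typing import Callable

# Hypothetical deny-list; real prompt injection defenses need far more than regexes.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (the |your )?system prompt", re.IGNORECASE),
]
MAX_PROMPT_CHARS = 4000  # hypothetical size limit

audit_log = logging.getLogger("ai.audit")  # hypothetical logger name


def guarded_call(user: str, prompt: str, model: Callable[[str], str]) -> str:
    """Filter a prompt, call the model only if it passes, and log the exchange as JSON."""
    blocked = len(prompt) > MAX_PROMPT_CHARS or any(
        p.search(prompt) for p in SUSPICIOUS_PATTERNS
    )
    output = "Request refused by policy." if blocked else model(prompt)
    # One JSON object per exchange keeps the audit trail easy to parse later.
    audit_log.info(json.dumps({
        "ts": time.time(),
        "user": user,
        "blocked": blocked,
        "prompt": prompt,
        "output": output,
    }))
    return output
```

The audit logger emits nothing until logging is configured, for example with `logging.basicConfig(level=logging.INFO)`. Because the log captures full prompts and outputs, it may contain sensitive data and needs the same access controls as the model itself.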

“By prioritizing security and compliance, organizations can ensure their AI-driven innovations remain effective and safe in this complex, ever-evolving landscape,” the document reads.

In addition to the six critical security controls, SANS also offers advice for deploying AI models, recommending that organizations do it gradually and incrementally, starting with non-critical systems; that they establish a central AI governance board; and that they draft an AI incident response plan.

For more information about AI security controls:

- “The AI Supply Chain Security Imperative: 6 Critical Controls Every Executive Must Implement Now” (Coalition for Secure AI)

- “OWASP AI Exchange” (OWASP)

- “AI Safety vs. AI Security: Navigating the Commonality and Differences” (Cloud Security Alliance)

- “The new AI imperative is about balancing innovation and security” (World Economic Forum)

- “AI Data Security” (Australian Cyber Security Centre)

- “The Rocky Path of Managing AI Security Risks in IT Infrastructure” (Cloud Security Alliance)
